You can write flawless Swift code, follow Apple’s Human Interface Guidelines to the letter, and still ship an iOS app that fails under real-world conditions.

Why?

Succinctly stated, the real challenge in mobile app development isn’t building features. It’s ensuring they actually work across the different devices, OS versions, and network environments your users rely on.

Testing for iOS is more than ticking off unit tests. It’s about simulating real usage, catching UI regressions before Apple does, and ensuring consistent behavior across the full device spectrum (from the iPhone SE to the latest iPad Pro). Yet, many teams still treat QA as the final hurdle to be jumped rather than a continuous, integrated practice.

Whether you’re solo-developing your next App Store release or managing a cross-functional mobile team, this guide will help you rethink your iOS testing strategy. We’ll break down the essential test types, compare automation tools like XCUITest and Appium, discuss real vs. simulated device testing, and show how to embed quality into your CI/CD pipelines without bloating your release cycle.

The goal? To help you build faster, test smarter, and launch with confidence.

Understanding the iOS Ecosystem

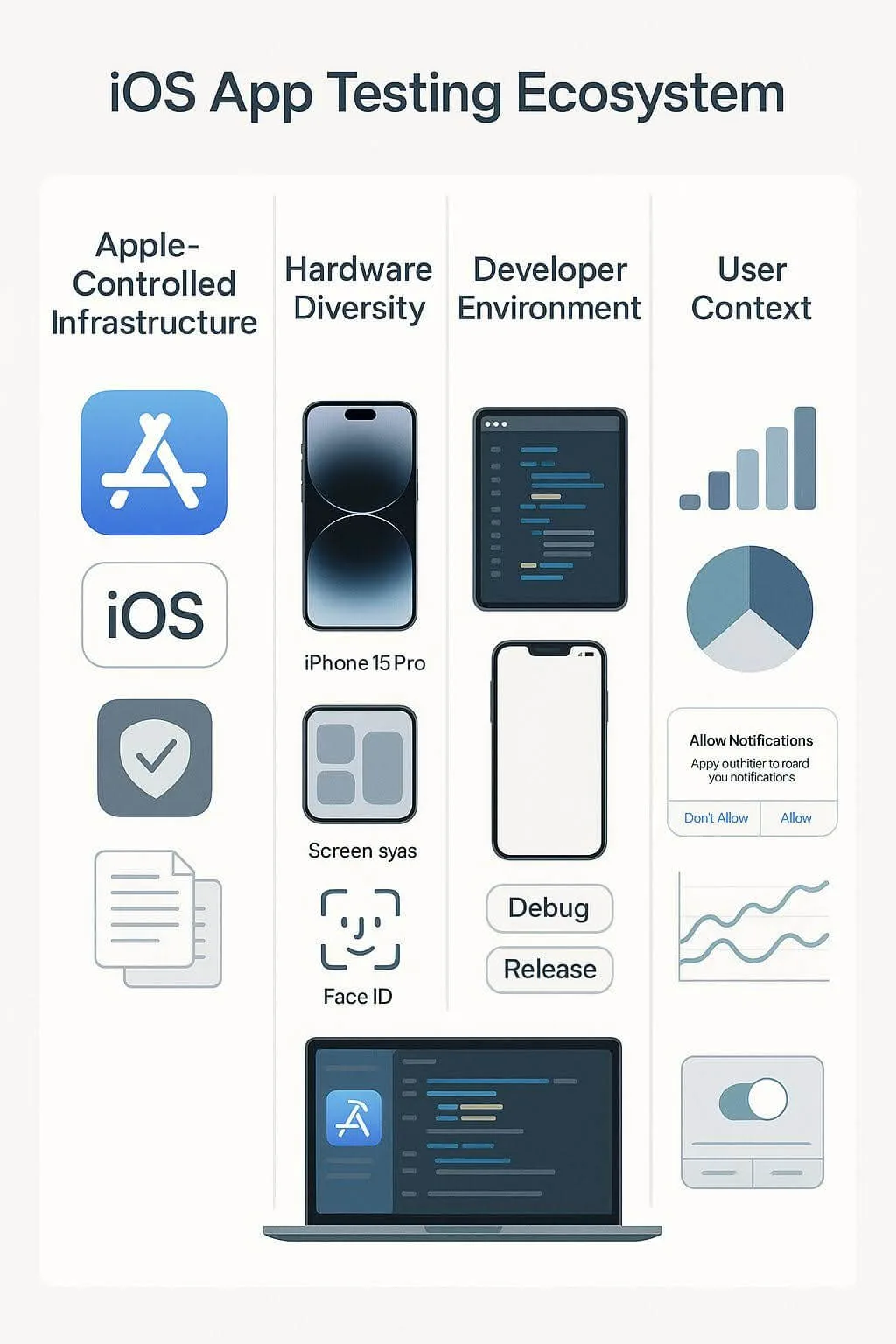

Testing iOS apps isn’t just about running scripts or checking screens. It’s about working within the constraints and expectations of Apple’s tightly controlled environment. Unlike Android, where fragmentation across devices and vendors introduces chaos, Apple offers uniformity, but with its own quirks.

1. A controlled but fast-changing landscape

Apple’s ecosystem is vertically integrated: the company controls the hardware (iPhones, iPads), the operating system (iOS), and the distribution platform (App Store). This provides developers with fewer device variants and predictable OS behaviors.

But that level of control comes at a cost:

- Frequent OS updates can silently break features.

- App Store review enforces strict performance and privacy guidelines.

- Limited visibility into low-level system logs and behaviors compared to Android.

Even minor OS updates can change API behavior or UI rendering, which is why regression testing against every new iOS version, and especially before each major release, is critical.

2. Device uniformity ≠ testing simplicity

It’s a common misconception that testing for iOS is simpler because there are “fewer devices.” But here’s the nuance:

- Apple typically supports roughly five to seven device generations at any given time.

- Not all users upgrade their OS immediately; version fragmentation still exists.

- Screen resolutions, aspect ratios, and device capabilities (e.g., Face ID vs. Touch ID) vary widely.

This means your testing strategy must cover devices like:

- iPhone SE (small screen, older chipset).

- iPhone 15/16 (modern, high-performance).

- iPads with split-screen support and unique UI behavior.

3. Development vs. App Store realities

A critical iOS-specific challenge is that the build you test locally isn’t always what users experience in production.

- Debug builds often behave differently from release builds.

- Xcode simulators don’t accurately replicate memory usage, thermal behavior, or hardware interactions.

- Features like push notifications, background fetch, and battery usage behave differently on real devices vs. test environments.

That’s why real-device testing is non-negotiable, especially for performance-sensitive features, device sensors, or native integrations like camera, Bluetooth, or ARKit.

In summary, even with a polished development workflow, iOS testing must factor in:

- OS version fragmentation.

- Hardware variability.

- App Store submission constraints.

- Real-world user contexts.

By understanding these constraints upfront, QA teams can design test plans that go beyond checklists and realistically validate how the app behaves under production-like conditions.

Manual vs. Automated Testing: Choosing the Right Tool for the Right Risk

Every QA team faces the same dilemma: how do you test fast without cutting corners? The answer usually lies in how you split the load between manual and automated testing, and which one you choose when.

Take, for example, a developer working on a new onboarding sequence. Animations seem fine in the simulator, but during a manual run on an iPhone SE, they appear jittery. No automated test flagged the issue. Automation doesn’t “see” visual stutters the way humans do. This is where manual testing excels. It brings human perception, intuition, and fluidity into the QA process.

When and why manual testing still matters

Manual testing still plays a critical role in:

- First-time user experience (FTUE).

- Accessibility validations, like VoiceOver support.

- Edge cases that automation often misses.

- Beta testing through TestFlight, where human feedback surfaces subtle UX misfires.

However, manual testing doesn’t scale.

The speed, scale, and stability of automated testing

When you’re shipping updates on a weekly or biweekly cadence and targeting multiple iOS devices and versions, automation becomes crucial.

Tools like XCUITest or Appium allow teams to script and maintain extensive test suites that run consistently at scale. These frameworks support parallel execution across simulators and real devices, dramatically reducing test duration and improving feedback loops during development.

As documented by Bitrise, Sauce Labs, and BrowserStack, parallel automation is now a baseline capability for mature mobile QA pipelines.

Automation also introduces discipline. It guards against regressions, integrates with CI/CD workflows, and ensures consistency across builds, something manual runs can’t do. Tests can run automatically on every pull request (PR), on nightly builds, or before each release.

| 💬 Bugsee Team Insight “Even the most advanced automation suite can’t feel lag or notice a clunky transition. That’s why smart teams blend automation with manual QA. It’s not a trade-off; it’s a collaboration.” — Bugsee Engineering Team. |

When to choose manual vs. automated

Here’s a quick decision matrix:

| Task/Risk Area | Manual Testing | Automated Testing |
|---|---|---|
| UX & Visual Regression | Best for UI nuance | Not suitable |
| Functional Test Coverage | Limited | High scalability |
| Accessibility Checks | Required | Partial; pair with manual checks |
| Regression in CI/CD Pipelines | Time-consuming | Ideal use case |
| Beta Feedback via TestFlight | Crucial for UX signals | Not applicable |
| Performance Benchmarking | Subjective insights | Scriptable, repeatable |

Manual testing uncovers what automation can’t. Automation scales what manual testing can’t. The goal isn’t choosing between them; it’s orchestrating both to match your app’s complexity, team capability, and release velocity.

Next, we’ll explore the core layers of iOS tests (unit, integration, UI, and regression) and how each fits into a layered QA strategy for shipping quality code with confidence.

Testing Types Explained

Testing isn’t a single activity. It’s a layered discipline. To build confidence in an iOS release, teams rely on a variety of test types, each targeting a different risk. From the smallest unit of code to full UI flows, knowing what to test, where, and how can dramatically reduce bugs in production and improve engineering velocity.

In this section, we’ll break down the core testing types used in iOS development and explain where each fits into a scalable QA strategy.

1. Verifying the building blocks with unit tests

Unit tests validate the smallest testable parts of your code: typically individual functions, methods, or classes. They’re fast, easy to automate, and form the foundation of test-driven development (TDD).

In iOS, unit tests are typically written using XCTest, Apple’s native testing framework. These tests make sure logic works as expected, including features like currency conversion, data formatting, or validation rules.

Use unit tests to:

- Catch regressions early in the dev cycle.

- Validate logic branches and boundary conditions in business rules.

- Build confidence during refactors.

For example:

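```swift
import XCTest

final class CartTests: XCTestCase {
    func testTotalPriceWithTax() {
        let cart = Cart()
        cart.addItem(price: 10.00)
        // Assuming totalPriceWithTax() returns a Double, compare with a tolerance.
        XCTAssertEqual(cart.totalPriceWithTax(), 10.80, accuracy: 0.001)
    }
}
```

2. Making sure components play well together with integration testing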

While unit tests validate isolated functions, integration tests ensure that multiple parts of the app (such as the UI, network, and database) work together as expected. These tests are especially useful for detecting issues caused by API schema changes, dependency conflicts, or state management bugs.

In iOS, integration testing can be done using XCTest or Appium, often by simulating real workflows like logging in, syncing data, or navigating through a feature set.

Use integration tests to:

- Validate how modules communicate.

- Test multi-step user workflows.

- Catch environment or configuration issues in staging.
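As a rough illustration of the first two points, the sketch below wires a login service to a session store and exercises them together against a stubbed backend. Every type name here (`AuthAPI`, `SessionStore`, `LoginService`, `StubAuthAPI`, `LoginError`) is hypothetical and defined inline only so the example is self-contained; a real suite would more likely point the same test at a staging API.

```swift
import XCTest

// Hypothetical app-side types, declared here so the sketch compiles on its own.
protocol AuthAPI {
    /// Returns a session token, or throws if the credentials are rejected.
    func login(email: String, password: String) throws -> String
}

enum LoginError: Error, Equatable {
    case invalidCredentials
}

final class SessionStore {
    private(set) var token: String?
    func save(token: String) { self.token = token }
}

final class LoginService {
    private let api: AuthAPI
    private let store: SessionStore

    init(api: AuthAPI, store: SessionStore) {
        self.api = api
        self.store = store
    }

    var isSignedIn: Bool { store.token != nil }

    func signIn(email: String, password: String) throws {
        let token = try api.login(email: email, password: password)
        store.save(token: token)
    }
}

// Stub standing in for the real backend (an assumption for this example).
struct StubAuthAPI: AuthAPI {
    func login(email: String, password: String) throws -> String {
        guard password == "secret" else { throw LoginError.invalidCredentials }
        return "token-123"
    }
}

final class LoginIntegrationTests: XCTestCase {
    func testSuccessfulLoginPersistsToken() throws {
        let store = SessionStore()
        let service = LoginService(api: StubAuthAPI(), store: store)

        try service.signIn(email: "user@example.com", password: "secret")

        // The service and the store must agree on the resulting state.
        XCTAssertEqual(store.token, "token-123")
        XCTAssertTrue(service.isSignedIn)
    }

    func testFailedLoginLeavesStoreEmpty() {
        let store = SessionStore()
        let service = LoginService(api: StubAuthAPI(), store: store)

        XCTAssertThrowsError(try service.signIn(email: "user@example.com", password: "wrong"))
        XCTAssertNil(store.token)
    }
}
```

The point of the sketch is that neither assertion targets a single function: both check the state that results from two components cooperating, which is what separates this layer from unit tests.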

3. Ensuring interfaces work as designed with UI testing

UI tests simulate user interactions: tapping buttons, entering text, and swiping through screens. In iOS, XCUITest is the go-to framework for UI testing. It integrates tightly with Xcode and can execute tests across simulators and real devices.

However, UI tests can be brittle and require thoughtful structure to remain maintainable over time. They’re especially prone to breaking when layout or view hierarchy changes occur, even if the underlying functionality hasn’t changed.

That’s why experts recommend assigning accessibility identifiers to key UI elements. These identifiers act as persistent anchors across UI changes, improving selector stability. As highlighted in this Repeato guide, Eleken’s 2025 testing guide, and Stack Overflow, robust identifier use is critical to maintaining test resilience.

Use UI tests to:

- Validate user flows like onboarding or checkout.

- Confirm rendering consistency across devices.

- Verify accessibility functionality (when paired with manual checks).

| 💡 Best Practice Tip Always assign accessibility identifiers to key UI elements (buttons, labels, input fields), especially in critical workflows. These identifiers decouple your test logic from the visual structure, making your tests far more resilient to UI redesigns and refactors. |
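To make the identifier advice concrete, here is a minimal XCUITest sketch for a login flow. The screen, the identifier strings, and the `LoginScreenID` enum are all hypothetical; the sketch also assumes the enum lives in a file compiled into both the app target and the UI test target, which is one possible setup rather than a requirement.

```swift
import XCTest

// Hypothetical identifiers, kept in one file that both the app target and the
// UI test target compile, so a rename cannot silently break selectors.
enum LoginScreenID {
    static let emailField = "login.emailField"
    static let passwordField = "login.passwordField"
    static let submitButton = "login.submitButton"
    static let welcomeLabel = "home.welcomeLabel"
}

// App side (UIKit):   emailField.accessibilityIdentifier = LoginScreenID.emailField
// App side (SwiftUI): TextField("Email", text: $email)
//                         .accessibilityIdentifier(LoginScreenID.emailField)

final class LoginUITests: XCTestCase {
    override func setUp() {
        super.setUp()
        // Stop at the first failure; later steps depend on earlier ones.
        continueAfterFailure = false
    }

    func testLoginShowsWelcomeLabel() {
        let app = XCUIApplication()
        app.launch()

        // Elements are looked up by identifier, not by label text or position.
        let emailField = app.textFields[LoginScreenID.emailField]
        XCTAssertTrue(emailField.waitForExistence(timeout: 5))
        emailField.tap()
        emailField.typeText("user@example.com")

        let passwordField = app.secureTextFields[LoginScreenID.passwordField]
        passwordField.tap()
        passwordField.typeText("secret")

        app.buttons[LoginScreenID.submitButton].tap()

        let welcome = app.staticTexts[LoginScreenID.welcomeLabel]
        XCTAssertTrue(welcome.waitForExistence(timeout: 10))
    }
}
```

Because the test never references button titles or view positions, a copy change or layout redesign should leave it untouched as long as the identifiers stay attached to the same controls.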

4. Guarding against backsliding with regression testing

Regression tests are less about what’s being tested and more about why. They exist to ensure that previously fixed bugs don’t reappear in future builds—a risk that grows with every code change.

Regression tests can include unit, integration, UI, or even manually maintained test cases, and are essential in the later stages of development and release.

Regression testing is especially critical:

- After major refactors.

- Before shipping to production.

- When upgrading to a new iOS version.

| 💡 Pro Tip from the Bugsee Team “We’ve seen the same crash resurface months after a hotfix, not because it wasn’t fixed, but because the regression suite didn’t include a test to catch it. QA debt can be just as dangerous as tech debt. ” — Bugsee QA Team. |
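One way to pay down that kind of QA debt is to pin every fixed bug with a named test. The sketch below assumes a hypothetical past crash (an integer division by zero when averaging prices in an empty cart) and a hypothetical ticket ID, `CART-101`; `CartSummary` is defined inline purely for illustration.

```swift
import XCTest

// Hypothetical model type containing the already-fixed code path.
struct CartSummary {
    var itemPricesInCents: [Int] = []

    /// Returns nil for an empty cart. The assumed original bug divided by
    /// `count` unconditionally, which traps at runtime when the cart is empty.
    var averageItemPriceInCents: Int? {
        guard !itemPricesInCents.isEmpty else { return nil }
        return itemPricesInCents.reduce(0, +) / itemPricesInCents.count
    }
}

final class CartRegressionTests: XCTestCase {
    // Regression for hypothetical ticket CART-101: empty cart crashed the summary screen.
    func testCART101_averagePriceOfEmptyCartIsNil() {
        XCTAssertNil(CartSummary().averageItemPriceInCents)
    }

    // Guards the happy path so the fix itself cannot regress normal behavior.
    func testAveragePriceOfNonEmptyCart() {
        let summary = CartSummary(itemPricesInCents: [1000, 2000, 600])
        XCTAssertEqual(summary.averageItemPriceInCents, 1200)
    }
}
```

Putting the ticket reference in the test name is a design choice, not a rule: it makes it obvious why the test exists when someone is tempted to delete it months later.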

Test types in a layered QA strategy

Here’s how different test types typically align with a CI/CD pipeline:

| Layer | Test Type | Scope | Speed | Frequency |
|---|---|---|---|---|
| Foundation | Unit Tests | Single functions | Fast | Every commit/PR |
| Integration Layer | Integration Tests | Multi-module functions | Medium | Pre-merge builds |
| UI Layer | UI Tests | End-to-end workflows | Slower | Nightly/pre-release |
| Validation Layer | Regression Tests | Known issues | Variable | Before each release |

Putting test types into practice

Each type of test (unit, integration, UI, and regression) plays a distinct role in safeguarding your app’s quality. But writing tests isn’t enough. Where and how they run can make or break their effectiveness.

In the next section, we’ll look at test execution environments and why choosing between simulators and real devices is more than a matter of convenience.

Simulators vs. Real Devices

Now that you know what to test, the next question is where those tests should run.

For iOS developers, the choice typically falls between Xcode simulators and physical Apple devices. Both environments play a role in the QA pipeline, but they differ dramatically in fidelity, performance, and trustworthiness.

1. Testing with Simulators

Simulators (built into Xcode) are a vital part of any developer’s toolkit. They launch quickly, cost nothing to scale, and are tightly integrated with test frameworks like XCUITest and XCTest.

They’re ideal for:

- Rapid prototyping and UI iteration.

- Basic logic validation and flow control.

- Testing on devices you don’t own (e.g., older iPhones or iPads).

But despite their convenience, simulators have critical blind spots:

- They don’t simulate real-world performance factors like thermal throttling, memory pressure, or battery drain.

- They often lack support for camera, Bluetooth, biometrics, or hardware sensors.

- Networking conditions are idealized—no dropped packets, latency spikes, or signal loss.

As noted in Apple’s documentation, simulators “approximate behavior” but are not substitutes for real hardware testing.
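Given those blind spots, one option is to let hardware-dependent tests skip themselves when they run in the simulator, so they show up as skipped rather than as false passes or failures. The sketch below uses Swift's `targetEnvironment(simulator)` compile-time condition together with XCTest's `XCTSkipIf`; the `TestEnvironment` helper and the camera test are hypothetical names for illustration.

```swift
import XCTest

// Hypothetical helper that reports where the test binary is running.
enum TestEnvironment {
    static var isSimulator: Bool {
        #if targetEnvironment(simulator)
        return true
        #else
        return false
        #endif
    }
}

final class CameraTests: XCTestCase {
    func testCameraCapturePipeline() throws {
        // Reported as "skipped" in the simulator instead of passing vacuously.
        try XCTSkipIf(TestEnvironment.isSimulator,
                      "Camera hardware is not available in the simulator")

        // Hardware-dependent assertions for the capture pipeline would go here.
    }
}
```

Skipped tests remain visible in the test report, which makes it easier to confirm that the device run actually covered them.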

2. Testing on physical devices

Testing on physical iPhones and iPads is more time-consuming, but it delivers the only truly accurate picture of how your app behaves in production.

Real devices expose issues that simulators miss, like:

- Thermal and memory-related crashes (especially on older hardware).

- Authentication and biometric edge cases (e.g., Face ID failures).

- App behavior under backgrounding, multitasking, or airplane mode.

- Push notifications and deep linking across app states.

A performance regression might pass in the simulator but become obvious on an iPhone 11 running iOS 16 under heavy CPU load. That’s why mature teams test on a diverse set of real devices, often automated through services like Firebase Test Lab, BrowserStack, or AWS Device Farm.

As BrowserStack explains, real-device clouds provide broad coverage without needing a physical device lab—a scalable solution for distributed QA teams.
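To make a device-specific slowdown visible in a test run, XCTest’s `measure` block can time a hot path over several iterations. This is only a sketch: `sortedLeaderboard` is a hypothetical stand-in for whatever code path matters in your app, and the input size is arbitrary.

```swift
import XCTest

// Hypothetical hot path: rank scores from highest to lowest.
func sortedLeaderboard(_ scores: [Int]) -> [Int] {
    scores.sorted(by: >)
}

final class LeaderboardPerformanceTests: XCTestCase {
    func testSortingPerformance() {
        // Deterministic pseudo-shuffled input so every run measures the same work.
        let scores = (0..<50_000).map { ($0 * 7919) % 10_007 }

        // XCTest runs the block multiple times and reports the timing statistics.
        measure {
            _ = sortedLeaderboard(scores)
        }
    }
}
```

The numbers only mean something relative to the hardware they were recorded on, so a test like this is most useful when it runs on the oldest physical device you still support rather than in the simulator.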

Matching testing environments to stages

Here’s a quick matrix to help you decide where each type of testing best fits:

| Testing Stage | Simulators | Real Devices |
|---|---|---|
| Unit Testing | ✅ | ❌ |
| UI Testing (basic flows) | ✅ | ✅ |
| Sensor-dependent testing | ❌ | ✅ |
| Beta testing/FTUE | ❌ | ✅ |
| Crash, memory, and CPU testing | ❌ | ✅ |

Simulators are fast, flexible, and great for catching obvious bugs early. But they aren’t real. Real-device testing is non-negotiable if you’re serious about performance, reliability, and shipping user-ready apps. The best strategies use both: simulators for speed, devices for truth.

| 💡 Pro Tip Crashes often appear only on real hardware under real load. Simulators might give green lights, but device testing reveals what’s truly production-ready. |

Where Runtime Observability Tools Fit in the iOS Lifecycle

Automated tests, CI/CD pipelines, and real-device testing build a solid QA foundation. But even the most rigorous pre-release testing can’t account for every condition your app will face in the wild, especially once it’s in the hands of real users.

That’s where runtime observability tools come in.

These tools embed directly into your app and capture rich context around crashes, performance issues, and user-reported bugs. When something breaks, whether in TestFlight, staging, or production, tools like Bugsee capture:

- A video replay of what the user experienced.

- Touch events, network calls, and console logs.

- System-level state, such as memory, CPU, device orientation, and connectivity.

- Any custom events or metadata you choose to track.

This data is automatically routed to your bug tracker or issue management tools (Jira, Slack, GitHub), giving developers everything they need to close the loop between bugs and fixes.


Where runtime observability fits into the QA pipeline:

| QA Layer | Purpose | Tools & Role |
|---|---|---|
| Unit & Integration Testing | Validate logic & core functionality | XCTest, Appium, XCUITest |
| CI/CD Execution | Automate and enforce coverage | GitHub Actions, Jenkins, Bitrise |
| Device Environments | Surface behavior in real-world conditions | Simulators, real devices |
| Runtime Observability | Capture crashes and user behavior post-test | Bugsee |
| Issue Management | Route actionable data to dev teams | Jira, Slack, Trello, GitHub |

You can build a flawless, comprehensive test suite and still miss the one path that causes a crash in production. Observability doesn’t replace testing. It extends its reach into real-world conditions, making sure that what slips past QA (and staging) doesn’t go undetected in the wild.

| 💡 Pro Tip With runtime capture, it is no longer necessary to piece together bug reports from partial screenshots, vague steps, or third-hand feedback. You get complete visibility — instantly. |

In Conclusion…

Effective iOS testing doesn’t start with tools. It starts with strategy.

From choosing the right test types and environments to knowing when to automate and when to explore manually, today’s QA teams must build systems that scale without sacrificing insight. And as your app moves from development to production, having visibility into how it performs in the real world becomes just as critical as what happens in staging.

The most successful teams don’t rely on a single layer of defense. They blend:

- Manual and automated testing.

- Simulators and physical devices.

- CI/CD pipelines and runtime observability.

Together, these layers create the kind of coverage that can support fast releases and high-quality user experiences, even in the unpredictable reality of mobile.

FAQs

1. What’s the difference between unit, integration, and UI testing in iOS?

The differences between these three test types are as follows:

- Unit tests validate individual functions or methods in isolation.

- Integration tests check how multiple components interact with each other.

- UI tests simulate user interactions to ensure the app behaves correctly from the end user’s perspective.

2. Do I really need to test on real devices if simulators are available?

Yes. Simulators are fast and convenient, but they can’t replicate real-world performance, battery behavior, or hardware-specific issues. Real-device testing is essential for shipping reliable apps.

3. What is runtime observability, and how does it help QA?

Runtime observability tools, such as Bugsee, record what happens in the app during user sessions or crashes, capturing logs, videos, and state data. It’s like a flight recorder for mobile apps, helping developers diagnose bugs without having to reproduce them manually.


4. Can automated tests completely replace manual testing?

No. While automated tests offer speed and consistency, manual testing is still essential for validating UX, accessibility, and edge cases that require human judgment.