Most teams treat testing like a final checklist before launch: one last sprint to squash bugs, get QA sign-off, and ship. But that mindset is costing companies more than they realize.

In modern mobile development, testing isn’t a phase. It’s a full-cycle discipline that starts with your prototype and continues long after your app hits the store. And it’s not just for QA engineers. Testing touches product decisions, UX design, engineering practices, and user trust.

Done well, testing isn’t just about catching bugs. It’s how teams:

- Validate that the app works as intended (functional testing).

- Understand how people use it (usability testing).

- Detect real-world issues that never show up in QA (post-release testing).

This article breaks down the real testing lifecycle behind successful mobile apps—from internal builds to production monitoring. Along the way, we’ll explore how teams structure their testing layers, where automation fits in, and why tools like Bugsee help capture the reality QA environments can’t replicate.

Let’s redefine what the app testing stage actually means and why it matters to your roadmap, your users, and your product’s future.

The Testing Phase Myth

For years, testing was seen as the final step—the last stop before an app went live. Developers built, designers polished, and QA cleaned up the leftovers. Then, the product was launched.

That model no longer holds up in modern app development.

Apps now ship faster, release cycles are shorter, and expectations are higher. Teams can’t afford to wait until the end to discover that something’s broken or, worse, that it works but no one knows how to use it. Testing has evolved into a parallel, full-lifecycle discipline woven throughout design, development, deployment, and beyond.

In practice, that means testing touches nearly every stage:

- During sprints: Validating new features with unit, integration, and UI tests.

- During design: Prototyping user flows and gathering early feedback.

- During builds: Automating test suites in CI/CD pipelines.

- After release: Tracking real-world behavior and uncovering hidden bugs.

And that’s not just QA’s job anymore.

Developers write unit tests. Designers run moderated usability sessions. Product managers analyze heat maps and feedback loops. Testing isn’t a handoff. It’s a shared responsibility.

The biggest shift? Testing no longer answers only the question “Does it work?” It now also asks:

- “Is it usable?”

- “Will it scale?”

- “What happens in the wild that we missed in QA?”

Understanding this mindset is foundational because, without it, the rest of your testing strategy becomes reactive instead of resilient.

In the next section, we’ll discuss the three layers of a modern mobile testing stack and why each plays a different role in building high-quality apps.

The Three Layers of a Mobile Testing Stack

Most teams think of testing as a single activity. But in reality, it’s a stack of overlapping processes. Each layer serves a different purpose, relies on different tools, and asks a different kind of question.

These layers aren’t strictly sequential. In modern mobile app development, they operate in parallel, active throughout sprint cycles, staging builds, and long after release.

1. Functional testing

“Does the system behave as expected?”

Functional testing is the foundation of any mobile testing stack. It validates whether individual components, integrated modules, and full workflows operate according to your app’s defined requirements.

This layer includes:

- Unit tests

- Integration tests

- Regression tests

- Smoke tests

Together, these methods form the safety net that prevents breakages, logic errors, and unexpected side effects as the codebase evolves. While functional testing can involve both manual and automated processes, automation is key to making this layer scalable and reliable, especially when integrated into CI/CD pipelines.

2. Usability testing

“Can real people succeed with the app?”

Just because a feature works doesn’t mean it’s usable. Usability testing focuses on how real users interact with your product—where they succeed, where they hesitate, and where they get stuck.

This testing layer asks questions like:

- Do users know what to do next?

- Can users complete tasks with minimal friction?

- Are they abandoning flows before reaching the goal?

While usability testing often involves design and product teams, its impact extends across engineering and QA. These insights reveal issues that no amount of functional testing can catch.

3. Post-release testing

“What breaks in the wild?”

Pre-launch testing can’t predict everything. Once your app is in the hands of real users, on real devices, in real-world conditions, new problems emerge, ones you never saw in QA.

This layer focuses on observing how the app performs in production:

- What crashes under unexpected use?

- What devices behave differently?

- Where do users drop off or encounter friction?

Post-release testing gives teams visibility into runtime behavior and failures in the field. It’s not a replacement for QA, but it fills the gap between internal testing and real-world usage.

Next, we’ll dive into the functional testing layer and how to structure it for long-term coverage and engineering velocity.

How Functional Testing Anchors the Mobile Testing Lifecycle

While usability or production issues often surface unpredictably, functional testing provides a structured foundation on which teams can count. It doesn’t just verify behavior. It stabilizes it. By defining how features should operate and enforcing those expectations through tests, functional testing protects the integrity of your app as it evolves.

What functional testing looks like in practice

Functional testing verifies specific units, integrations, and workflows against defined requirements. Its purpose is to catch regressions, enforce logic, and give developers the confidence to ship quickly.

1. Unit tests (e.g., verifying input/output behavior)

```swift
// Swift (XCTest)
import XCTest

// AuthService is the app's own type; the test class name is illustrative.
final class AuthServiceTests: XCTestCase {
    func testLoginSuccess() {
        let result = AuthService.login(username: "user", password: "secure123")
        XCTAssertTrue(result.isSuccess)
    }

    // Edge case: invalid input
    func testLoginFailsWithEmptyPassword() {
        let result = AuthService.login(username: "user", password: "")
        XCTAssertFalse(result.isSuccess)
        XCTAssertEqual(result.error, .missingPassword)
    }
}
```

Unit tests like these validate expected logic under ideal conditions and extend easily to edge cases, invalid input, and error states. They help expose silent failures early and form the first layer of defense in your test suite.

2. Integration tests (e.g., verifying a flow across modules)

```kotlin
// Kotlin (Espresso + MockWebServer)
// Assumes the activity under test is already launched and the app's
// base URL points at mockWebServer.
@Test
fun testUserProfileLoadsCorrectly() {
    // Queue the canned profile response the app will receive
    mockWebServer.enqueue(MockResponse().setBody(mockUserProfile))
    onView(withId(R.id.loadProfileButton)).perform(click())
    onView(withId(R.id.userName)).check(matches(withText("Jane Doe")))
}
```

These tests confirm that the UI, network layer, and data bindings all work together as expected, something unit tests alone can’t guarantee.

3. Smoke tests (e.g., verifying high-level workflows)

These typically run on every build via CI, ensuring the app launches and performs core tasks before deeper test runs.

```yaml
# GitHub Actions - Basic smoke test step
# connectedDebugAndroidTest needs a connected device or emulator on the runner
- name: Run Smoke Tests
  run: ./gradlew connectedDebugAndroidTest
```

| 💡 Frameworks, CI, and Developer Velocity: Automated functional testing becomes even more powerful when wired into your build pipeline. Tools like XCTest (iOS), Espresso (Android), Appium, and Detox (React Native) allow teams to maintain coverage across platforms while surfacing issues early in the cycle. Most importantly, functional tests aren’t just a safety measure. They are a velocity multiplier. When your team trusts its test coverage, it can ship faster, refactor more aggressively, and iterate without fear of breaking something critical. |
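Regression tests, also part of the functional layer, follow the same pattern as the unit tests above: once a bug is fixed, a test pins the exact input that triggered it so the bug can’t quietly return. Here is a minimal, framework-free sketch in Kotlin. The `normalizeUsername` function and the bug history in the comments are hypothetical, and in a real project these checks would normally live in a test framework such as JUnit rather than in `main`.

```kotlin
// Hypothetical app code, standing in for a real validator.
// Assumed bug history: an earlier version rejected "  Jane  " because it
// validated the raw input instead of the trimmed value.
fun normalizeUsername(raw: String): String? {
    val trimmed = raw.trim()
    return if (trimmed.isEmpty()) null else trimmed.lowercase()
}

// Regression test: pins the exact input from the (hypothetical) bug report.
fun testUsernameWithSurroundingWhitespaceIsAccepted() {
    check(normalizeUsername("  Jane  ") == "jane") { "whitespace regression is back" }
}

// Guard the other side of the fix: blank input must still be rejected.
fun testBlankUsernameIsStillRejected() {
    check(normalizeUsername("   ") == null) { "blank input must be rejected" }
}

fun main() {
    testUsernameWithSurroundingWhitespaceIsAccepted()
    testBlankUsernameIsStillRejected()
    println("regression checks passed")
}
```

The second check matters as much as the first: a fix that accepts padded usernames should not start accepting empty ones.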

Why Usability Testing Exposes What QA Can’t

Just because a feature works doesn’t mean people can use it. Usability testing isn’t about logic. It’s about friction and how real people experience your app.

It answers questions that functional tests can’t:

- Are users confused when they land on the screen?

- Do they know what to tap next?

- Can they complete tasks without hesitation, or do they get stuck halfway?

Why usability testing matters

Mobile users make decisions in seconds. If an interface is unclear (even slightly), people bounce. And they don’t leave feedback—they just leave.

This is what makes usability testing so critical. It validates design assumptions behind your features before (and after) your app ships.

Unlike crash logs or test failures, usability problems often show up as:

- High bounce rates.

- Low task completion.

- Unexplained drop-off in funnels.

And they are often invisible unless you’re actively watching how users behave.

How it works in practice

Usability testing can happen throughout the development lifecycle. Common methods include:

- Prototype testing: Click-through tests on early mockups using tools like Figma, Maze, or UserTesting.

- Moderated testing: Observe users performing key flows while narrating their thought processes.

- Unmoderated testing: Send tasks to real users and gather heat maps, click data, and success metrics.

- A/B testing: Compare variations to measure which design performs better.

- Funnel analysis: Track drop-off through multi-step flows (e.g., onboarding, checkout).

No one method catches everything, but together, they paint a picture of usability that QA tests can’t simulate.
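To make funnel analysis concrete, here is a small Kotlin sketch that turns per-step user counts into drop-off rates. The `FunnelStep` type, the step names, and the numbers are all made up for illustration; real counts would come from whatever analytics tool you use.

```kotlin
import kotlin.math.roundToInt

// One step of a multi-step flow and how many users reached it.
data class FunnelStep(val name: String, val users: Int)

// Fraction of users (0.0 to 1.0) lost between each step and the next.
fun dropOffRates(steps: List<FunnelStep>): List<Pair<String, Double>> =
    steps.zipWithNext { from, to ->
        val lost = if (from.users == 0) 0.0
                   else (from.users - to.users).toDouble() / from.users
        "${from.name} -> ${to.name}" to lost
    }

fun main() {
    // Made-up numbers for a hypothetical onboarding flow
    val onboarding = listOf(
        FunnelStep("welcome", 1000),
        FunnelStep("create_account", 800),
        FunnelStep("grant_permissions", 400),
        FunnelStep("first_action", 360)
    )
    for ((transition, rate) in dropOffRates(onboarding)) {
        println("$transition: ${(rate * 100).roundToInt()}% drop-off")
    }
}
```

With these sample numbers, the steepest loss is between `create_account` and `grant_permissions`, where half of the remaining users leave. That is the step a moderated session or A/B test would look at first.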

Who owns this layer?

Designers and product managers often lead usability testing, but developers benefit from the findings. A button that’s technically “clickable” but visually hidden isn’t just a design flaw; it’s a product issue. The more technical teams understand usability problems, the more they can prevent them in future builds.

| 💡 Takeaway: Functional testing tells you if the app works. Usability testing tells you if people can actually use it. Both are crucial, but only one reveals how humans behave. |

What Post-Release Testing Reveals that QA Misses

No matter how comprehensive your QA process is, real users will always uncover scenarios you didn’t anticipate. The range of device types, OS versions, connectivity environments, and usage patterns in production is simply too broad to simulate in test labs.

This is where post-release testing plays a significant role, not as a formal testing phase, but as a system of continuous observability.

Why testing doesn’t stop at release

Pre-launch QA verifies intent. But once your app ships, users start generating behavior you never predicted. They:

- Skip onboarding entirely.

- Tap elements before animations finish.

- Run your app on outdated firmware or low-memory devices.

These edge conditions reveal a class of failures that rarely show up in structured QA environments.

Crashes tied to race conditions (issues when two asynchronous operations access shared resources simultaneously and execute in an unpredictable order) are notoriously hard to reproduce in QA but emerge quickly in real-world conditions.
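A tiny Kotlin sketch shows why these bugs are so slippery. Two versions of a counter are incremented from several threads: one unsynchronized, one using `AtomicInteger`. The function names are illustrative, and a counter is a deliberately simple stand-in for the shared state a real app would race on.

```kotlin
import java.util.concurrent.atomic.AtomicInteger
import kotlin.concurrent.thread

// Starts `threads` threads that each call `increment` `perThread` times,
// then waits for all of them to finish.
fun hammer(threads: Int, perThread: Int, increment: () -> Unit) {
    val workers = List(threads) {
        thread { repeat(perThread) { increment() } }
    }
    workers.forEach { it.join() }
}

// Unsafe: `count++` is a read, an add, and a write. Two threads can read
// the same value, and one of the two updates is then lost.
fun unsafeCount(threads: Int, perThread: Int): Int {
    var count = 0
    hammer(threads, perThread) { count++ }
    return count
}

// Safe: AtomicInteger performs the read-modify-write as one atomic step.
fun safeCount(threads: Int, perThread: Int): Int {
    val count = AtomicInteger(0)
    hammer(threads, perThread) { count.incrementAndGet() }
    return count.get()
}

fun main() {
    println("expected: ${8 * 100_000}")
    println("unsafe:   ${unsafeCount(8, 100_000)}") // often lower, varies per run
    println("safe:     ${safeCount(8, 100_000)}")
}
```

The unsafe version can return the right answer on one run and a wrong one on the next, depending on how the threads happen to interleave. That is the same reason a race can pass every QA run and still crash on a user’s device.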

Other problems are equally elusive: latency spikes that cause screens to freeze or poorly optimized flows that users abandon halfway through. Production testing isn’t scripted; it’s emergent. And without visibility into these failures, teams are left flying blind.

What observability tells you that logs don’t

To make sense of what users are experiencing, teams need more than crash reports. Effective post-release testing involves capturing:

- Crash traces and logs linked to specific device states and OS conditions.

- Session replays that visualize user interaction before, during, and after failure.

- Touch input data, navigation paths, and rendered UI state.

- Console output, network transactions, and background activity timelines.

This data enables teams to recreate issues without needing to reproduce them manually. It also helps prioritize bugs not just by severity but by frequency and user impact.
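As a rough sketch of what prioritizing by severity, frequency, and user impact can look like, the Kotlin below ranks grouped crashes by severity weighted by the share of users affected, with raw occurrence count as a tie-breaker. The `CrashGroup` fields and the scoring formula are assumptions made for illustration, not a standard and not how any particular tool scores crashes.

```kotlin
// Hypothetical shape of an aggregated crash report; field names are illustrative.
data class CrashGroup(
    val signature: String,  // e.g. the top stack frame
    val severity: Int,      // 1 (cosmetic) to 3 (blocks a core flow)
    val occurrences: Int,   // how many times it was seen
    val affectedUsers: Int  // distinct users who hit it
)

// Illustrative score: severity weighted by the share of active users affected.
fun impactScore(group: CrashGroup, activeUsers: Int): Double {
    val reach = if (activeUsers == 0) 0.0
                else group.affectedUsers.toDouble() / activeUsers
    return group.severity * reach
}

// Highest impact first; ties are broken by raw occurrence count.
fun prioritize(groups: List<CrashGroup>, activeUsers: Int): List<CrashGroup> =
    groups.sortedWith(
        compareByDescending<CrashGroup> { impactScore(it, activeUsers) }
            .thenByDescending { it.occurrences }
    )

fun main() {
    // Made-up sample data
    val groups = listOf(
        CrashGroup("NullPointerException in checkout", severity = 3, occurrences = 120, affectedUsers = 90),
        CrashGroup("Layout glitch in settings", severity = 1, occurrences = 900, affectedUsers = 600),
        CrashGroup("Freeze on cold start", severity = 2, occurrences = 300, affectedUsers = 250)
    )
    prioritize(groups, activeUsers = 10_000).forEach { println(it.signature) }
}
```

With this sample data, the low-severity layout glitch ranks first because it reaches far more users than the checkout crash. Whether that is the right call depends on your product, which is why the weights should be treated as a starting point and tuned.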

How Bugsee captures crashes, context, and user behavior

Bugsee equips mobile teams with full observability after deployment, automatically capturing what really happens when something breaks in production.

When a crash or critical event occurs, Bugsee records:

- A visual replay of the UI.

- The user’s last interactions.

- Logs, console output, and network traffic.

- Device and OS context.

Bugsee begins capturing production insights when your app goes live; no manual instrumentation is required. It integrates seamlessly across iOS, Android, Flutter, React Native, Cordova, and Xamarin. Try it free in your stack in minutes—no setup friction.

| 💡 Strategic insight (beyond triage): Leading teams don’t treat post-release testing as damage control. They treat it as a growth signal. Session analytics reveal where users hesitate or abandon tasks. Repeated crashes on specific devices inform test coverage gaps. UI replays highlight patterns that usability tests missed. With the right tools, production observability drives roadmap decisions, not just bug fixes. |

The goal of post-release testing isn’t just to fix bugs after the fact. It’s to close the feedback loop between lab conditions and real-world behavior. Teams that use production insights proactively can prioritize fixes based on actual impact, improve release stability, and align future updates with reality, not theory.

CI/CD, Automation, and the Testing Feedback Loop

For modern engineering teams, testing doesn’t end with a green checkmark. It continues after the code is deployed, through every crash, edge case, and unexpected user path. That’s why the most resilient mobile stacks no longer treat testing as a gate. They treat it as a feedback loop.

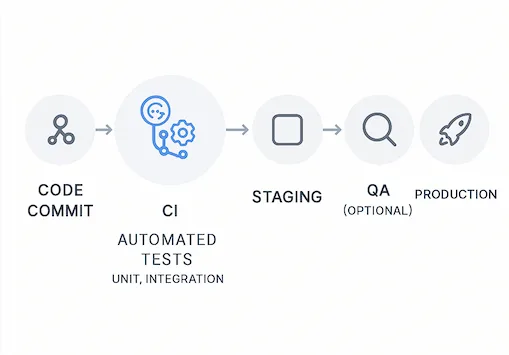

In mature CI/CD environments, functional tests are integrated directly into the build pipeline. Code commits trigger automated test suites, validate critical paths, and catch regressions before they ever reach staging. Paired with tools like GitHub Actions, Bitrise, or GitLab CI, this setup enforces consistency and repeatability without slowing velocity.

But automation alone isn’t enough.

Post-release data from real users closes the loop, surfacing issues missed in controlled environments and pointing QA toward blind spots. When crash replays, touch traces, and session context are captured automatically, testing becomes self-correcting. Each release strengthens the next.
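One simple way to picture production data pointing QA toward blind spots: compare the device and OS combinations that crash in production against the ones your test matrix covers. The Kotlin sketch below does exactly that. The `DeviceConfig` type and the sample data are hypothetical, and a real pipeline would read both lists from your crash reporting tool and CI configuration.

```kotlin
// A device/OS combination, as it might appear in crash metadata or a test matrix.
data class DeviceConfig(val model: String, val osVersion: String)

// Configurations that crashed in production but are missing from the test
// matrix, ordered by how many crashes each one produced.
fun coverageGaps(
    productionCrashes: List<DeviceConfig>,
    testMatrix: Set<DeviceConfig>
): List<Pair<DeviceConfig, Int>> =
    productionCrashes
        .filter { it !in testMatrix }
        .groupingBy { it }
        .eachCount()
        .toList()
        .sortedByDescending { it.second }

fun main() {
    // Made-up sample data
    val testMatrix = setOf(DeviceConfig("Pixel 8", "14"))
    val crashes = listOf(
        DeviceConfig("Pixel 8", "14"),
        DeviceConfig("Galaxy A12", "11"),
        DeviceConfig("Galaxy A12", "11"),
        DeviceConfig("Moto G", "10")
    )
    for ((config, count) in coverageGaps(crashes, testMatrix)) {
        println("${config.model} / OS ${config.osVersion}: $count crash(es), not in test matrix")
    }
}
```

Each entry in the result is a candidate for the next release’s device matrix, which is how post-release data feeds back into pre-release coverage.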

Tools like Bugsee play a pivotal role here, not just by exposing production failures but by giving engineers the full context to resolve them quickly. That visibility supports more than triage. It informs test coverage strategy, usability refinement, and architectural decisions. Curious how Bugsee fits into your QA and coverage strategy? Try it free for 30 days. No credit card needed.

A well-instrumented CI/CD pipeline isn’t just a delivery mechanism. It’s a living system fed by test automation, accelerated by fast feedback, and shaped by real-world usage.

Conclusion

Mobile teams used to ask, “Did we test everything?”

Now they ask, “Which testing layer caught what—and what slipped through?”

This shift reflects how mobile testing has evolved from a one-time QA phase to a continuous, layered system that spans design, development, deployment, and production.

Functional, usability, and post-release testing aren’t competing priorities. They’re interdependent disciplines, each revealing different truths about your app’s quality and user experience. Together, they form a full-spectrum feedback loop that improves with every release.

The teams that embrace this mindset don’t just catch bugs. They learn from them. They validate early, observe post-launch, and use that insight to continually harden their architecture and improve their product.

Tools like Bugsee help close the loop by making production crashes and user sessions visible without adding overhead. That visibility gives developers the power to fix what matters, fast. It helps QA shift from reactive bug hunts to proactive coverage strategies. And it gives leadership confidence in the stability and usability of every new release. Start your free 30-day trial and bring production visibility into every release.

Testing today isn’t about perfection. It’s about visibility, velocity, and the ability to adapt. Teams that invest in layered, full-cycle testing ship better apps: faster, smarter, and more reliably than ever before.

FAQs

1. What’s the difference between STLC and SDLC in mobile development?

SDLC (Software Development Lifecycle) refers to the entire process of creating software, from initial planning and requirements gathering through design, development, deployment, and maintenance.

STLC (Software Testing Lifecycle) focuses solely on the structured phases of testing. These typically include requirement analysis, test planning, test case development, environment setup, test execution, and test cycle closure.

While SDLC covers the “what” and “how” of building software, STLC ensures quality through validation and verification. In modern mobile development, testing is no longer confined to a single phase. Instead, STLC practices are integrated throughout the SDLC via automation, CI/CD, and observability tools.

2. What are the phases of mobile app testing?

The most effective mobile testing strategies follow a three-layer structure:

- Functional testing: Verifies whether features and logic work as intended.

- Usability testing: Evaluates how real users navigate and experience the app.

- Post-release testing: Captures performance, crashes, and edge cases in production.

These layers operate in parallel to catch different types of issues throughout the development lifecycle.

3. Why is it important to test your mobile apps continuously?

Mobile testing isn’t a one-time event. Continuous testing helps teams catch bugs earlier, validate new features faster, and understand how apps behave in the wild. As devices, OS versions, and user behaviors evolve, ongoing testing ensures long-term stability and usability. Tools like Bugsee extend this visibility into production, giving developers the context they need to fix issues fast and improve over time.